Biochip Chatter Tool

I developed a Blueprint-driven biochip animation system in Unreal Engine that exposes jaw state and backlight parameters directly to Sequencer, allowing them to be keyed per shot and driven by editorial data.

The system is built around a base Biochip Blueprint that represents the biochip on its own, containing all shared logic for jaw state control and backlight behaviour, with the relevant parameters exposed to Sequencer. From this base class, I created child Blueprints that represent the biochip when mounted on different equipment, specifically the backpack, helmet, and gun. This approach allowed the same underlying system to be reused across all contexts while keeping equipment-specific behaviour and presentation cleanly separated.
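
As a loose analogy only, the base-and-child arrangement can be sketched in Python. The real system is a set of Blueprints, not Python classes, and every class, field, and method name below is hypothetical, chosen purely to illustrate how shared logic sits in the base while each mount overrides only what differs.

```python
from dataclasses import dataclass


@dataclass
class Biochip:
    """Stand-in for the base Blueprint: shared jaw and backlight state."""
    jaw_open: bool = False
    backlight_on: bool = False
    mount: str = "standalone"  # hypothetical label for the mounting context

    def set_jaw(self, is_open: bool) -> None:
        # Shared logic: every variant drives the jaw the same way.
        self.jaw_open = is_open

    def set_backlight(self, is_on: bool) -> None:
        # Shared logic: every variant drives the backlight the same way.
        self.backlight_on = is_on

    def display_profile(self) -> str:
        # Presentation depends only on the mount label (hypothetical naming).
        return f"{self.mount}_display"


@dataclass
class BackpackBiochip(Biochip):
    mount: str = "backpack"


@dataclass
class HelmetBiochip(Biochip):
    mount: str = "helmet"


@dataclass
class GunBiochip(Biochip):
    mount: str = "gun"
```

Each child inherits `set_jaw` and `set_backlight` unchanged and differs only in its mount, which is the separation the paragraph above describes.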

While the core mesh and rig were authored by other team members, I was responsible for the Blueprint architecture, Sequencer integration, and automation workflow. I also made targeted adjustments to the biochip material to ensure the pixel-based skull accurately matched the concept art, including correct pixel scale, alignment, and on-screen coverage.

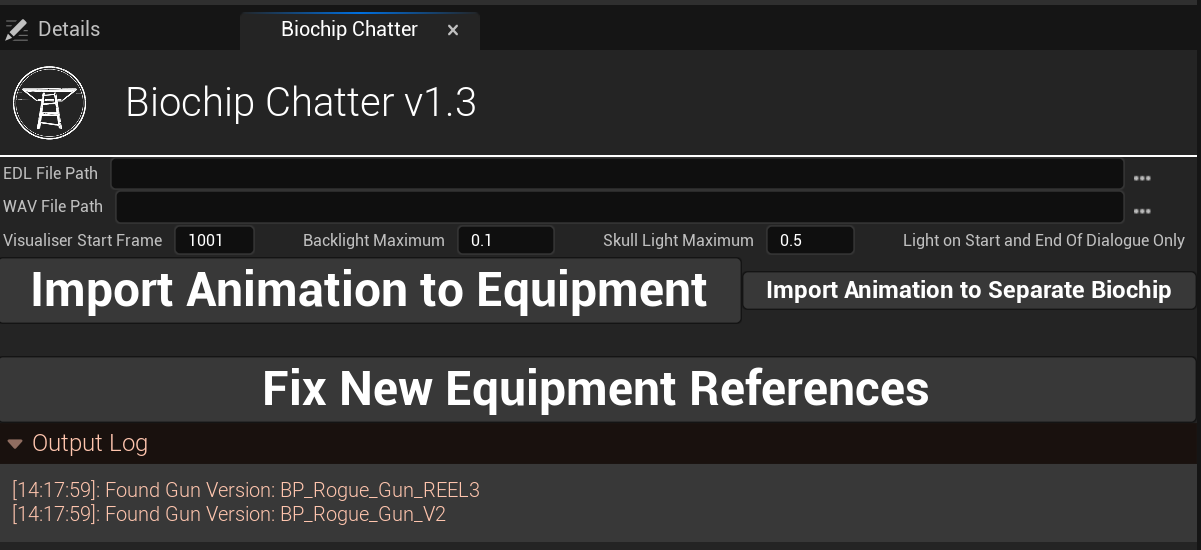

The automation is driven through an Editor Utility Widget where the user selects two input files: an EDL containing editorial timecode for speech, and a WAV file used to drive the on-screen audio visualiser. When executed, the tool automatically imports the WAV file into a dedicated Biochip folder within the shot’s Anim directory, creates a Synesthesia NRT asset in the same location, and assigns the WAV file to it so the audio can be analysed and used in-engine.

The widget provides two execution paths depending on context. One path automatically applies the setup to equipment-mounted biochips by parsing the equipment name from the EDL filename and resolving it to the correct child Blueprint, whether the biochip is on a backpack, helmet, or gun. The second path applies the setup to a standalone biochip actor when it is not mounted on equipment, while still ensuring the correct backpack, helmet, or gun display logic is applied based on the EDL naming convention.
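
The filename-to-Blueprint resolution could look roughly like the following Python sketch. The actual naming convention is not spelled out here, so the example assumes the equipment name appears as a separate token in the EDL filename; the Blueprint names and the sample filename are hypothetical.

```python
import re
from pathlib import Path

# Hypothetical mapping from equipment token to child Blueprint name.
BLUEPRINT_BY_EQUIPMENT = {
    "backpack": "BP_Biochip_Backpack",
    "helmet": "BP_Biochip_Helmet",
    "gun": "BP_Biochip_Gun",
}


def equipment_from_edl(edl_path: str) -> str:
    """Return the single equipment token found in the EDL filename."""
    stem = Path(edl_path).stem.lower()
    # Split on anything that is not a letter or digit (underscores, dashes, dots).
    tokens = set(re.split(r"[^a-z0-9]+", stem))
    matches = sorted(tokens & BLUEPRINT_BY_EQUIPMENT.keys())
    if len(matches) != 1:
        # Fail loudly instead of guessing when the name is missing or ambiguous.
        raise ValueError(f"Expected exactly one equipment name in {edl_path!r}, found {matches}")
    return matches[0]


def resolve_blueprint(edl_path: str) -> str:
    """Map an EDL filename to the (hypothetical) child Blueprint name."""
    return BLUEPRINT_BY_EQUIPMENT[equipment_from_edl(edl_path)]
```

For example, `resolve_blueprint("sh010_helmet_biochip.edl")` would pick the helmet variant, while a filename with no recognised equipment token raises an error rather than falling back silently.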

Using the EDL timecode, the tool keys the jaw state in Sequencer so the jaw opens and closes dynamically throughout speech. The backlight follows fixed, show-defined timing logic: it turns on shortly before speech begins, remains active while speech is ongoing, and only switches off once the jaw has remained closed for a fixed number of frames. The entire process is designed to be re-runnable, making it resilient to editorial updates and cut changes without requiring manual cleanup or rework.
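
The timing rules above can be sketched in Python as follows. This is a minimal illustration, not the tool's actual implementation: it assumes non-drop-frame `HH:MM:SS:FF` timecode, speech segments already reduced to `(start, end)` frame pairs, and hypothetical `lead_in` and `hold` frame counts standing in for the show-defined values.

```python
def timecode_to_frames(tc: str, fps: int) -> int:
    """Convert non-drop-frame HH:MM:SS:FF timecode to an absolute frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


def jaw_keys(speech):
    """Return (frame, is_open) keys: open at each segment start, closed at its end."""
    keys = []
    for start, end in sorted(speech):
        keys.append((start, True))
        keys.append((end, False))
    return keys


def backlight_spans(speech, lead_in, hold):
    """Return (on, off) frame spans for the backlight.

    The light turns on `lead_in` frames before speech and turns off only after
    the jaw has stayed closed for `hold` frames, so segments whose gap is too
    short for the light to switch off are merged into one span.
    """
    spans = []
    for start, end in sorted(speech):
        on = max(0, start - lead_in)
        off = end + hold
        if spans and on <= spans[-1][1]:
            # The light is still on when this segment needs it: extend the span.
            spans[-1][1] = max(spans[-1][1], off)
        else:
            spans.append([on, off])
    return [tuple(span) for span in spans]
```

Because both functions derive their output purely from the speech segments, recomputing them after an editorial change yields a fresh set of keys, which is the property that makes a re-runnable workflow practical.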